Building a Perplexity Clone with 100 lines of code

I’ve been a huge fan of AI search apps like Perplexity.ai, Phind.com, and You.com and have been a heavy user of them for about a year. If you’re not familiar, these products are an AI-powered question-answering platforms. They use large language models like ChatGPT to answer your questions, but improves on ChatGPT by pulling in accurate and real-time search results to supplement the answer (so no “knowledge cutoff”). Plus, they list citations within the answer itself which builds confidence it’s not hallucinating and allows you to research topics further.

So I wanted to know how it works. And it’s surprisingly simple at its core.

Here’s what I learned in building a version of it in about 100 lines of code.

Of course, to build amazing products like Perplexity, Phind, and you.com, you’ll need major investments in a delightful user experience, plenty of extra features, fine-tuned models, custom web-crawling, and more. Ultimately, it’ll be much more than just 100 lines of code!

The Basics

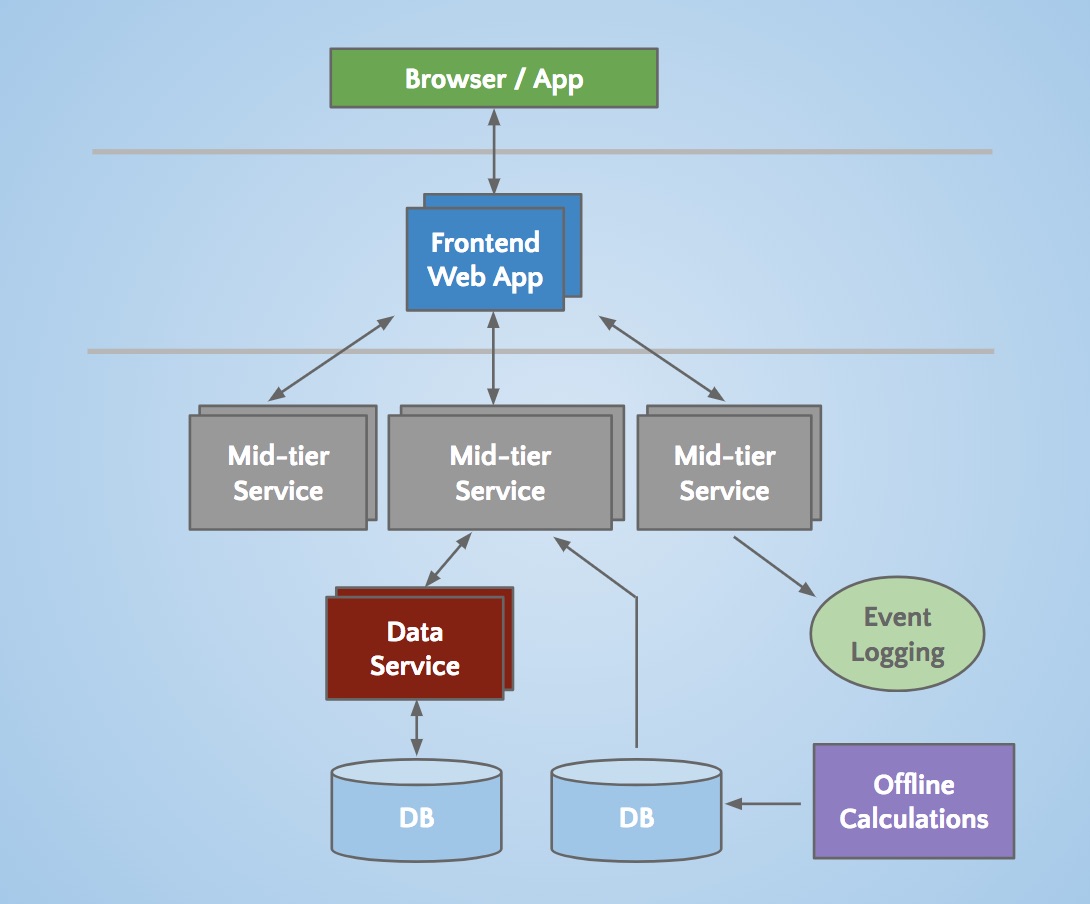

To create your own, you need to make just two API calls:

- Call a search engine API like Bing, Google, or Brave using the user’s query

- Craft a prompt to an LLM like ChatGPT passing along the search results in the context

Using search results to augment and improve upon an LLM’s answer

With the basics out of the way, let’s get into the details.

Step 1: Get Search Results for a user query

The main challenge with LLMs like ChatGPT is that they have knowledge cutoffs (and they occasionally tend to hallucinate). It’s because they’re trained on data up to a specific date (eg Sep 2021). So if you want an answer to an up-to-date question or you simply want to research a topic in detail, you’ll need to augment the answer with relevant sources. This technique is known as RAG (retrieval augmented generation). And in our case we can simply supply the LLM up-to-date information from search engines like Google or Bing.

To build this yourself, you’ll want to first sign up for an API key from Bing, Google (via Serper), Brave, or others. Bing, Brave, and Serper all offer free usage to get started.

There’s plenty of ways to make a remote call, but here’s a basic way of using cURL / PHP to call Brave’s search API:

$params = array('q' => $query);

$ENDPOINT = "https://api.search.brave.com/res/v1/web/search";

$url = $ENDPOINT . '?' . http_build_query($params);

$headers = array(

'X-Subscription-Token: ' . $BRAVE_KEY,

'Accept: application/json'

);

$curl = curl_init();

curl_setopt($curl, CURLOPT_HTTPHEADER, $headers);

curl_setopt($curl, CURLOPT_URL, $url);

curl_setopt($curl, CURLOPT_ENCODING, 'gzip');

curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1);

$response = curl_exec($curl);

curl_close($curl);These APIs will return the URL link, URL title, a description or snippet of the page, and more. After parsing the results, you are ready to construct a prompt to ChatGPT or another LLM of your choice.

Wait, just the snippet? Is that enough data for this to work?

Yes! Search results will typically only show a few hundred characters worth of content related to the user’s query. But it turns out, search engines are highly optimized at finding the relevant part of the page that corresponds to the user’s query. They are tuned at query understanding and often perform query rewriting or expansion to increase the recall and quality of the search results.

There’s a surprising amount of relevant data just within these short snippets

So while you could scrape the entire page, simply leveraging the great ranking and retrieval those search engines are doing will save you a lot of work. Plus, you’ll be able to respond to the user very quickly by doing this. Scraping the full page content is error-prone and slow, so your users will just be waiting around. And speed matters in search. Users want their answer not only correct, but delivered quickly.

Step 2: Decide the LLM you want to use

Here, you’ll need to decide which LLM and inference provider to use. For LLMs, each model has unique strengths, different costs, and different speeds. For example, gpt-4 and Claude 3 Opus are very accurate but expensive and slow. If you want to track the best models, here’s a ranking leaderboard. When in doubt, I’d recommend using chatgpt-3.5-turbo from OpenAI. It’s good enough, cheap enough, and fast enough to test this out.

Next, you’ll need to sign up for an API key from an LLM provider. There’s a lot of inference providers to choose from right now. For example there’s OpenAI, Anthropic, Anyscale, Groq, Cloudflare, Perplexity, Lepton, or the big players like AWS, Azure, or Google Cloud. I’ve used many of these with success and they offer a subset of current and popular closed and open source models.

Fortunately, most of these LLM serving providers are compatible with OpenAI’s API format, so switching to another provider / model is only minimal work (or just ask a chatbot to write the code!).

Test any queries or view code samples on OpenAI’s playground

Step 3: Craft a prompt to pass along the search results in the context window

When you want to ask an LLM a question, you can provide a lot of additional context. Each model has its own unique limit and some of them are very large. For gpt-4-turbo, you could pass along the entirety of the 1st Harry Potter book with your question. Google’s super powerful Gemini 1.5 can support a context size of over a million tokens. That’s enough to pass along the entirety of the 7-book Harry Potter series!

Fortunately, passing along the snippets of 8-10 search results is far smaller, allowing you to use many of the faster (and much cheaper) models like gpt-3.5-turbo or mistral-7b.

In my experience, passing along the user question, custom prompt message, and search result snippets are usually under 1K tokens. This is well under even the most basic model’s limits so this should be no problem.

Here’s a sample prompt I’ve been playing around with you can use (Hat-tip to the folks at Lepton AI who open-sourced a similar project which helped me refine my prompt).

You are an assistant written by Josh Clemm. You will be given a question and you will respond with an answer to the question. It must be accurate, high-quality, and expertly written in a positive, interesting, and engaging manner. It must be informative and in the same language as the user question. You will be provided a set of citations for the question. Each will start with a reference number like [citation:x], where x is a number. Always use the related citations and cite the citation at the end of each sentence in the format [citation:x]. If a sentence comes from multiple citations, please list all applicable citations, like [citation:2][citation:3]. Here are the provided citations:You will then need to dynamically add the sources / citations from the search results. For a user query like “best vegas hotels for families”, the citations would look a bit like this:

[citation:1] As for a hotel, I would echo the Mandalay Bay recommendation. There is a wide variety of restaurants, the pool complex is great, the Shark Reef is fun for families

[citation:2] Treasure Island is closest to the center strip and action as well as walking distance. Excalibur and Circus Circus for kids as 2nd floor has games galore.The language model will then return the answer with citations like the following:

When planning a family trip to Las Vegas, there are excellent hotel options ideal for families. Mandalay Bay is a standout choice, offering a wide variety of amenities suitable for all ages. It features numerous offerings like pools, arcades, and a Shark Reef [citation:1]. Other great options include Treasure Island, Excalibur, and Circus Circus [citation:2].And that’s it, you now have a working up-to-date answer engine with citations!

Step 4: Add Related or Follow Up Questions

One of the nice features of Perplexity and others is how they suggest follow up questions. Fortunately, this is easy to replicate.

To do this, you can make a second call to your LLM (in parallel) asking for related questions. And don’t forget to pass along those citations in the context again.

Or, you can attempt to construct a prompt so that the LLM answers the question AND comes up with related questions. This saves an API call and some tokens, but it’s a bit challenging getting these LLMs to always answer in a consistent and repeatable format.

Here’s an example prompt that has the LLM do both:

You will be given a question. And you will respond with two things.

First, respond with an answer to the question. It must be accurate, high-quality, and expertly written in a positive, interesting, and engaging manner. It must be informative and in the same language as the user question.

Second, respond with 3 related followup questions. First print "==== RELATED ====" verbatim. Then, write the 3 follow up questions in a JSON array format, so it's clear you've started to answer the second part. Do not use markdown. Each related question should be no longer than 15 words. They should be based on user's original question and the citations given in the context. Do not repeat the original question. Make sure to determine the main subject from the user's original question. That subject needs to be in any related question, so the user can ask it standalone.

For both the first and second response, you will be provided a set of citations for the question. Each will start with a reference number like [citation:x], where x is a number. Always use the related citations and cite the citation at the end of each sentence in the format [citation:x]. If a sentence comes from multiple citations, please list all applicable citations, like [citation:2][citation:3].

Here are the provided citations:And like before, you then need to dynamically add the citations. And doing so still is less than our 100 lines of code.

I’ve open-sourced this more basic version in PHP. Give it a try!

Step 5: Make it look a lot better!

To make this a complete example, we need a usable UI. And of course we’ll now go well beyond our 100 lines of code. I’m not the best web / frontend engineer, so won’t go into these details. But I will share with you the UI I open sourced (I’m using Bootstrap, jquery, and some basic CSS / javascript to make this happen).

These questions are making me hungry

The key parts I added to replicate the Perplexity experience are:

- The answer streams back to the user (improving perception of speed)

- The citations are replaced by a nicer in-line UI with a clickable popup for the user to learn more

- The sources considered are included after the answer in case the user wants to explore further

- A second call in parallel is made to a search API for images to enhance and supplement results

- Markdown and code syntax highlighting are used if necessary

Markdown and code syntax highlighting support!

Advanced Ways to Make it even Better

Query Understanding

Search engines today use natural language processing to determine the type of query a user has typed or to identify the entity. And based on that often will show different results. For example, a question like “top news” will retrieve actual news content vs. just returning websites that have news results. A question about a known person will fetch a “knowledge card” for them to supplement any searched results. Queries that are more basic questions (eg starts with “what” or “why”) may trigger an actual answer at the top of the results.

AI search apps don't do well with queries related to up to date sports scores unless manual query understanding is done

So if we really want to improve upon search engines today, we need to replicate that query understanding and custom response behavior.

To get more specific, let’s consider searching for news. You could check if the user query says “top news”, and if so, instead of making a search API call, you make a news API call. Or you might be regularly fetching and storing news articles, and you just need to fetch those. Then, you simply pass along those results along with your prompt to the LLM. At which point, the LLM just functions to summarize the articles you supplied.

Follow ups and Threads

All AI search apps offer the ability to ask custom follow up questions. In order to be an actual followup, you’ll need to add the original question and answer into the follow up prompt. You would use a very similar technique to adding web sources as done above.

If the user continues to follow up on the followup (eg a full question thread), you may start getting close to the maximum token length of the context window. At this point, the solution gets a bit more complex and beyond the scope of this article. But you essentially have to start storing, chunking, and using your own vector search to pass along the most relevant parts of the past thread. You got this!

Pro Search

Let’s say a topic is particularly difficult to answer. Or is more research oriented. Right now, our current solution is optimized for speed (and cost). Within about a second, we can perform both our search and LLM calls and begin to stream back the answer. But users may have some queries that don’t need to be answered quickly.

There’s some ways of replicating that.

First, in your search call, simply fetch more than the default 8-10 results.

Second, you could start workers to parse and scrape each search result, pulling in way more content for your context. Keep in mind this can introduce significant work since you really should chunk the document into relevant summarizations. Search engines like Google use extractive summarization for their snippets so you are really trying to replicate that work and they have a pretty large head start on you!

Finally, you’ll want to use a more advanced LLM, like gpt-4 or Claude 3 Opus. Those are trained on significantly more data. And therefore are far more accurate at inference. Plus, they can take in far more context (like those fully scraped results). But they are considerably slower and cost a lot more, so you’ll want to only use this pro or deeper search when appropriate.

Improving Answer Quality

To further improve quality of your results, there’s a few things to consider. First, you can always utilize the current most powerful general models like Claude 3 Opus and GPT 4 Turbo. Take a look at the LLM ranking leaderboard to see who’s the current top model.

Second, you can ask the user to provide the type of question they are asking (or attempt to infer it). Perplexity has focus options like “Writing”, “Academic”, “Math”. And with that focus, you can then utilize a more appropriate LLM for that. Take a look at this leaderboard to determine the best models for specific tests. For example, some models are better at coding, some are better at creative writing, and some are better at general Q&A.

Finally, you can chain a number of LLMs together as Agents. Andrew Ng gave a talk on AI agentic workflows where instead of just using one powerful model, you can use a number of more focused and faster / cheaper models together. This technique significantly increases the quality of the final output. For our use case, you could have one agent perform the initial query and a second that checks and scores it for accuracy. This works particularly well for making sure out-of-date sources aren’t part of the final answer.

Test it out

First, feel free to explore my open source PHP and basic web code. It works well running on some of the most basic web servers (like on this website).

But to make this example a bit more robust, I ended up porting the PHP code over to Javascript and am using Cloudflare (with workers and a KV cache) to up the production level a bit.

Go check out that version at https://yaddleai.com

Final thoughts

These RAG-based AI search apps are fascinating. They are truly a blend of LLM inference and classic search retrieval and ranking. Is the model answering the question itself but using the search snippets? or is the model simply summarizing the already highly relevant snippets?

Daniel Tunkelang offers a similar take on RAG search apps: “the role that the generative AI model plays is usually secondary to that of retrieval. The name ‘retrieval-augmented generation’ is a bit misleading — it might be better to call it ‘generative-AI-enhanced retrieval’”.

In any case, combining search + LLMs proves to be a powerful and fairly straightforward way to improve upon the classic question / answer framework.

Let me know if you end up building something like this yourself and share any lessons you learned!